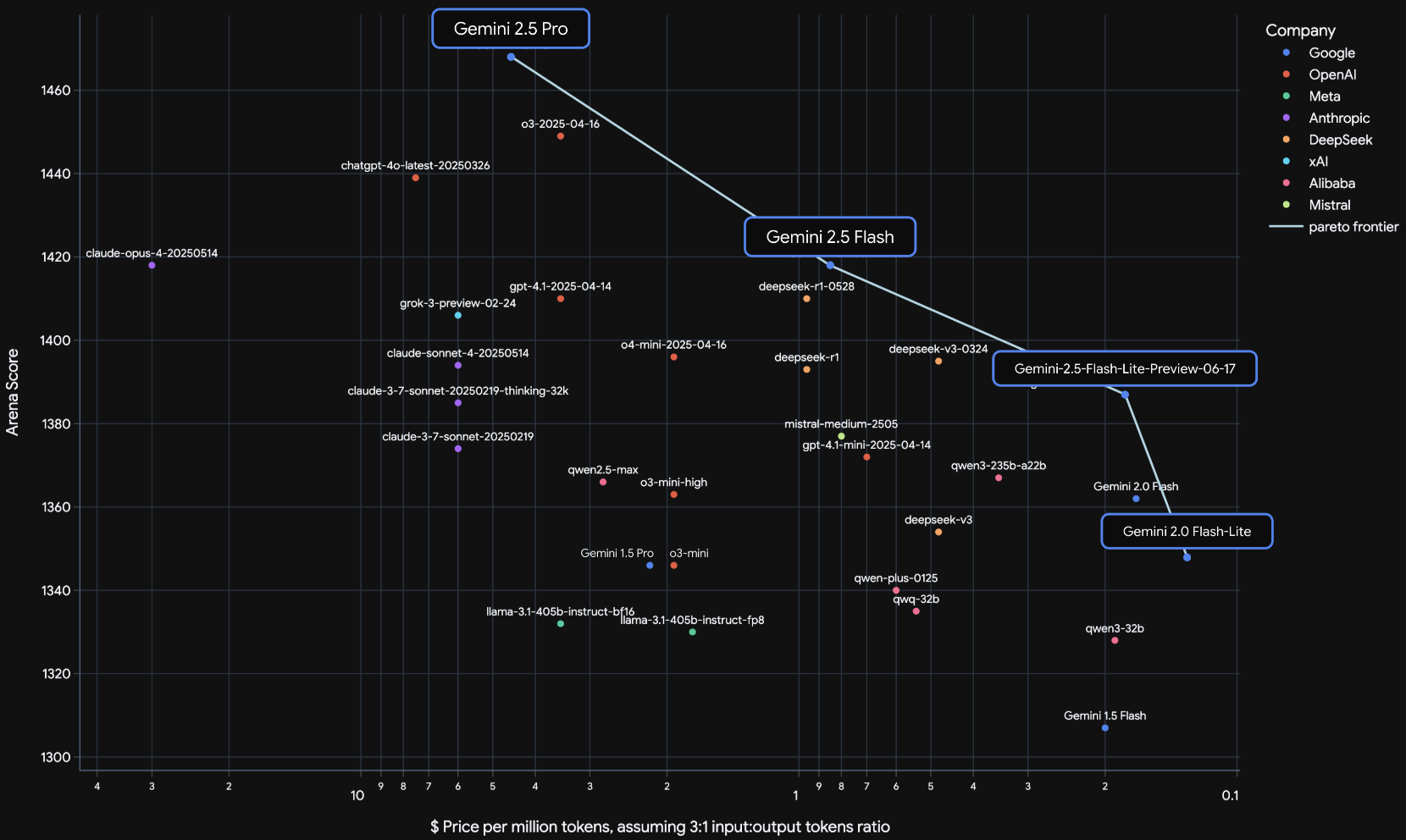

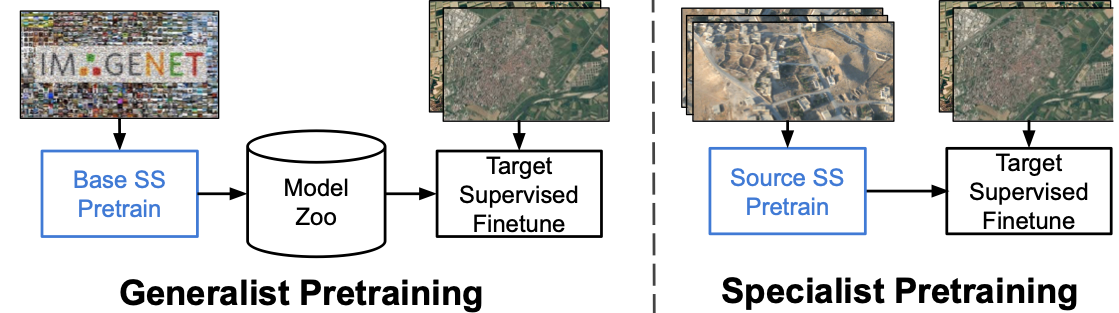

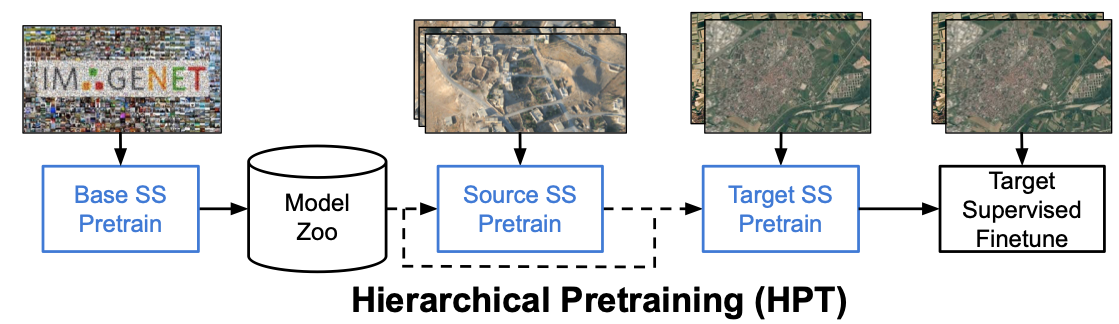

My research focuses on large-scale multi-modal generation and understanding. I have been a core contributor to Gemini 2.5, Veo 2.0, and Veo 3.0. During my PhD, I focused on efficient learning and domain adaptation in computer vision.

Continual Learning with Neural Networks, Sayna Ebrahimi; Spring 2020 [Computer Science]

Mechanical Behavior of Materials at Multiscale: Peridynamic Theory and Learning-based Approaches, Sayna Ebrahimi; Spring 2020 [Mechanical Engineering]
